01

Features

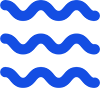

Monitor, analyze, then optimize

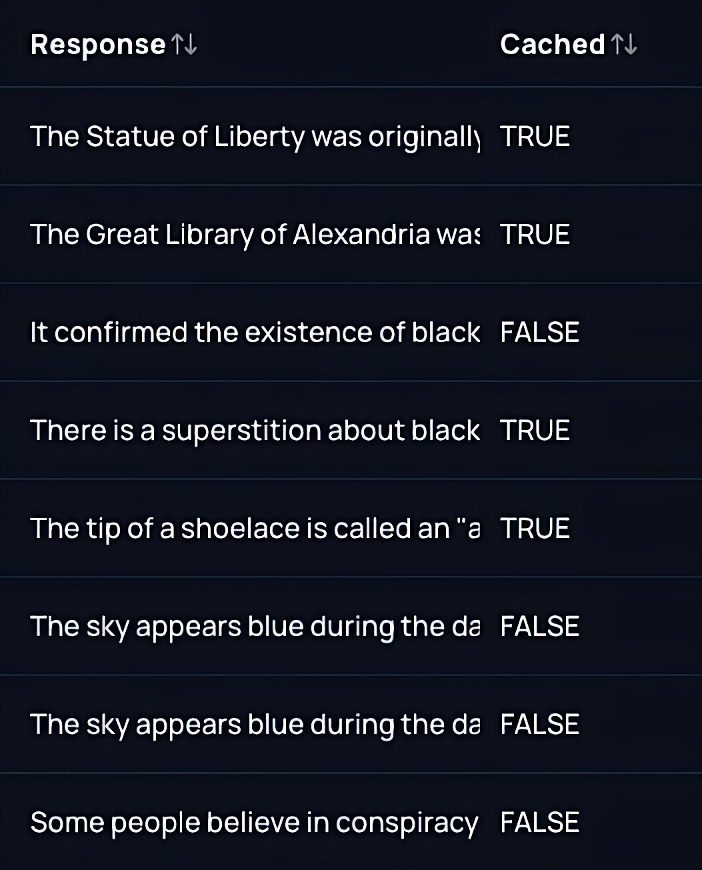

Usage monitoring

Track and analyze usage, requests, tokens, cache, and more.
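OpenAI-style chat completion responses include a `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens`. The sketch below shows the kind of aggregation a usage dashboard performs by grouping logged response payloads by model. It is only a rough illustration and not Flowstack's actual implementation. The `summarize_usage` function and the sample payloads are hypothetical.

```python
from collections import defaultdict


def summarize_usage(responses):
    """Aggregate request and token counts per model.

    Each item is assumed to be a dict shaped like an OpenAI chat completion
    response, with "model" and "usage" keys (hypothetical logging format).
    """
    summary = defaultdict(lambda: {"requests": 0, "prompt_tokens": 0,
                                   "completion_tokens": 0, "total_tokens": 0})
    for resp in responses:
        stats = summary[resp["model"]]
        stats["requests"] += 1
        usage = resp.get("usage", {})
        for key in ("prompt_tokens", "completion_tokens", "total_tokens"):
            stats[key] += usage.get(key, 0)
    return dict(summary)


# Hypothetical logged payloads, trimmed to the fields used above.
logged = [
    {"model": "gpt-3.5-turbo", "usage": {"prompt_tokens": 9, "completion_tokens": 12, "total_tokens": 21}},
    {"model": "gpt-3.5-turbo", "usage": {"prompt_tokens": 20, "completion_tokens": 5, "total_tokens": 25}},
]
print(summarize_usage(logged))
```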

Cost analytics

Understand how much your users, requests, and models are costing you.
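Cost analytics of this kind typically multiplies token counts by a per-token price and sums the results along some dimension. The sketch below assumes request records that already carry `user`, `model`, and token counts. The model names and the prices in `PRICES_PER_1K` are made-up placeholders, not real provider rates, and the functions are hypothetical.

```python
from collections import defaultdict

# Hypothetical placeholder prices in USD per 1,000 tokens (not real provider rates).
PRICES_PER_1K = {
    "model-a": {"prompt": 0.5, "completion": 1.5},
    "model-b": {"prompt": 1.0, "completion": 2.0},
}


def request_cost(record, prices=PRICES_PER_1K):
    """Cost of one request: tokens / 1000 * price, summed over prompt and completion."""
    rate = prices[record["model"]]
    return (record["prompt_tokens"] / 1000 * rate["prompt"]
            + record["completion_tokens"] / 1000 * rate["completion"])


def cost_by(records, key, prices=PRICES_PER_1K):
    """Total cost grouped by any record field, e.g. "user" or "model"."""
    totals = defaultdict(float)
    for record in records:
        totals[record[key]] += request_cost(record, prices)
    return dict(totals)


records = [
    {"user": "alice", "model": "model-a", "prompt_tokens": 1000, "completion_tokens": 2000},
    {"user": "bob", "model": "model-a", "prompt_tokens": 2000, "completion_tokens": 0},
    {"user": "alice", "model": "model-b", "prompt_tokens": 1000, "completion_tokens": 1000},
]
print(cost_by(records, "user"))   # {'alice': 6.5, 'bob': 1.0}
print(cost_by(records, "model"))  # {'model-a': 4.5, 'model-b': 3.0}
```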

LLM optimization

Easily add remote caching, rate limiting, and automatic retries.
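The page does not describe how Flowstack implements these features. The sketch below only illustrates two of the general techniques, response caching and retries with exponential backoff, on the client side. It assumes a caller-supplied `send` function that performs the HTTP request and raises a hypothetical `TransientError` on retryable failures. An in-memory dict stands in for the remote cache, and rate limiting is left out for brevity.

```python
import hashlib
import json
import random
import time


class TransientError(Exception):
    """Hypothetical marker for retryable failures (e.g. HTTP 429 or 5xx)."""


# In-memory dict standing in for a remote cache (e.g. a shared key-value store).
_cache = {}


def cache_key(request_body):
    """Hash canonical JSON so equivalent requests map to the same key."""
    canonical = json.dumps(request_body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def call_with_cache_and_retries(send, request_body, max_attempts=3, base_delay=0.5):
    """Return a cached response if present; otherwise call send() with retries."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    key = cache_key(request_body)
    if key in _cache:
        return _cache[key]
    for attempt in range(max_attempts):
        try:
            result = send(request_body)
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Exponential backoff with jitter: roughly base, 2*base, 4*base, ...
            time.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
        else:
            _cache[key] = result
            return result
```

Caching LLM responses means identical requests return identical text, so it suits cases where repeatable output is acceptable (for example, fixed prompts at temperature 0).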

02

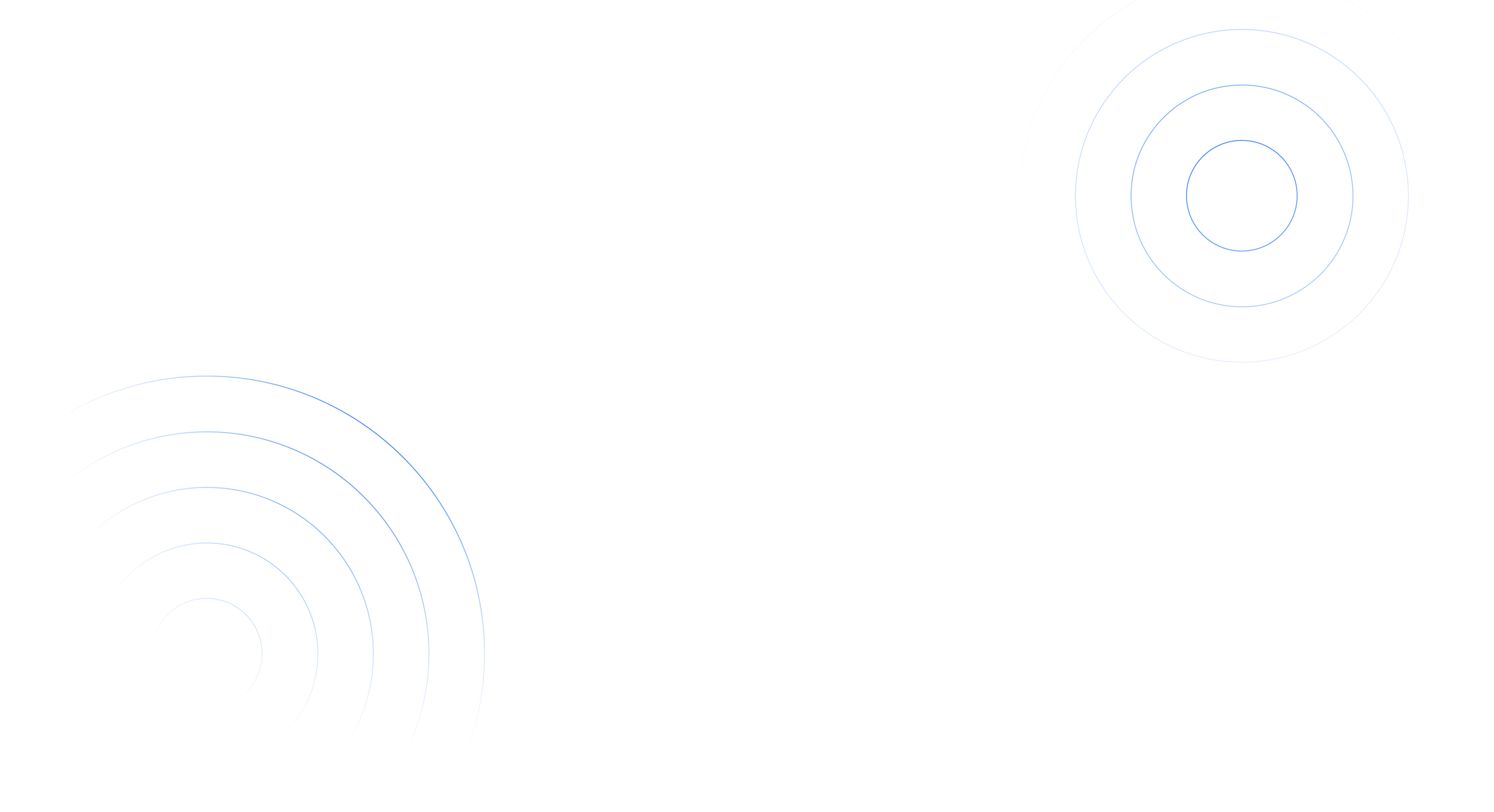

· Analytics

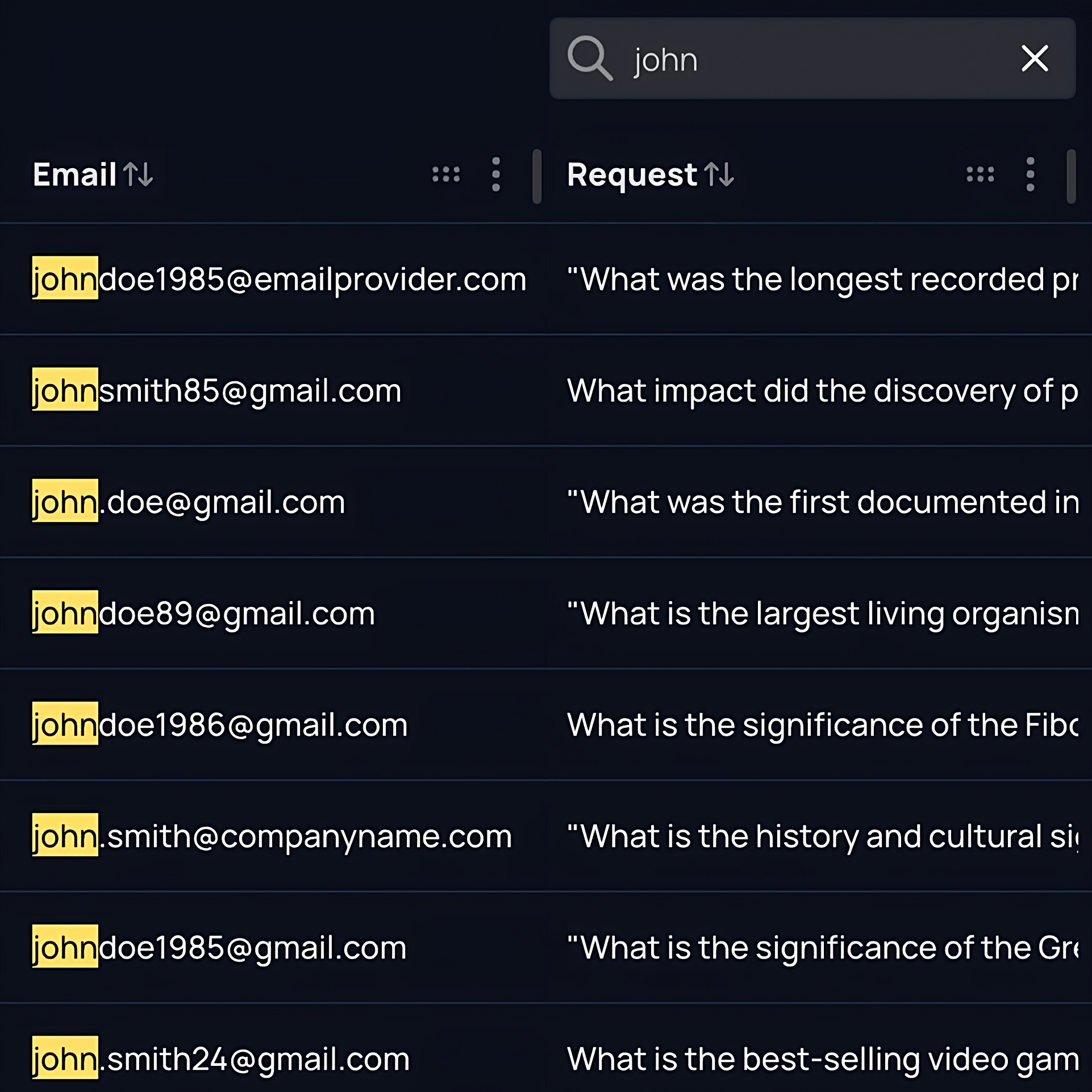

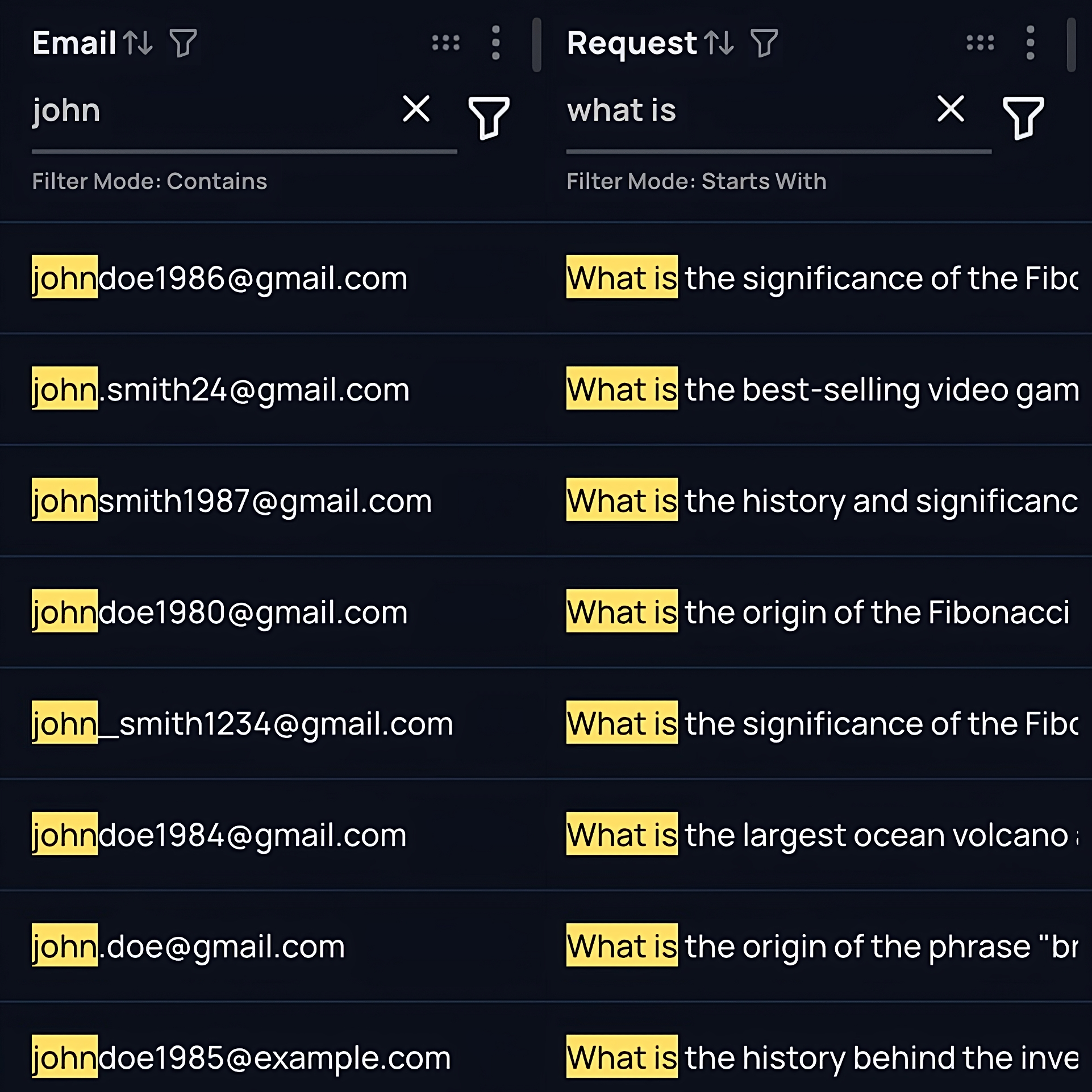

Search and filter through your data

Search by keyword

Use our powerful search function to find keywords across all of your data tables.

Filter by column

Look up keywords with exact or fuzzy matching, filtering on as many columns as you need.
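The page doesn't say how Flowstack's search is implemented. The sketch below is a minimal illustration of the difference between exact and fuzzy matching across columns. For the fuzzy case, it uses Python's standard `difflib.SequenceMatcher`. The row layout, function names, sample rows, and the 0.8 similarity threshold are all assumptions.

```python
from difflib import SequenceMatcher


def matches(value, query, fuzzy=False, threshold=0.8):
    """Exact mode: query appears verbatim (case-insensitive) in the cell.
    Fuzzy mode: some word in the cell is at least `threshold` similar to the query."""
    text = str(value).lower()
    query = query.lower()
    if not fuzzy:
        return query in text
    return any(SequenceMatcher(None, query, word).ratio() >= threshold
               for word in text.split())


def search_rows(rows, query, fuzzy=False):
    """Keyword search across every column of every row."""
    return [row for row in rows
            if any(matches(value, query, fuzzy) for value in row.values())]


def filter_rows(rows, filters, fuzzy=False):
    """Keep rows where every {column: query} filter matches."""
    return [row for row in rows
            if all(matches(row.get(column, ""), query, fuzzy)
                   for column, query in filters.items())]


# Hypothetical sample rows.
rows = [
    {"user": "alice", "model": "gpt-3.5-turbo", "prompt": "Hello!"},
    {"user": "bob", "model": "claude-2", "prompt": "Summarize this report"},
]
print(search_rows(rows, "hello"))                             # alice's row
print(filter_rows(rows, {"prompt": "sumarize"}, fuzzy=True))  # bob's row
```

With `fuzzy=True`, a misspelled query such as "sumarize" still matches a row containing "summarize", while exact mode returns no rows for it.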

03

· Setup

Set up with two lines of code

Point your requests at the Flowstack base URL, add your Flowstack API key as a Flowstack-Auth header, and you're done. Here's an example API request to OpenAI.

```shell
# A standard OpenAI chat request, sent to the Flowstack base URL with an extra Flowstack-Auth header.
curl --request POST \
  --url https://openai.flowstack.ai/v1/chat/completions \
  --header "Authorization: Bearer $OPENAI_API_KEY" \
  --header "Flowstack-Auth: Bearer $FLOWSTACK_API_KEY" \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "gpt-3.5-turbo",
    "messages": [
      {"role": "user", "content": "Hello!"}
    ]
  }'
```

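```javascript
import OpenAI from "openai";

// Point the OpenAI SDK at the Flowstack base URL and
// send your Flowstack key as an extra default header.
const openai = new OpenAI({
  apiKey: "YOUR_OPENAI_KEY",
  baseURL: "https://openai.flowstack.ai/v1",
  defaultHeaders: {
    "Flowstack-Auth": "Bearer YOUR_FLOWSTACK_KEY",
  },
});

// Requests are made exactly as they would be against OpenAI directly.
// Top-level await requires an ES module (e.g. a .mjs file).
const chatCompletion = await openai.chat.completions.create({
  messages: [{ role: "user", content: "Hello!" }],
  model: "gpt-3.5-turbo",
});

console.log(chatCompletion.choices[0].message.content);
```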

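```python
from openai import OpenAI  # requires openai>=1.0

# Point the client at the Flowstack base URL and send your Flowstack key
# in a Flowstack-Auth header on every request.
client = OpenAI(
    api_key="YOUR_OPENAI_KEY",
    base_url="https://openai.flowstack.ai/v1",
    default_headers={"Flowstack-Auth": "Bearer YOUR_FLOWSTACK_KEY"},
)

response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Hello!"}],
)
print(response.choices[0].message.content)
```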

04

· Pricing

Use Flowstack for free

Flowstack is completely free. All we ask in return is feedback to help us improve.

Use Flowstack for free

- Unlimited API requests
- Analytics dashboard and data tables
- Remote caching and rate limits
- Priority support

Enterprise

Custom

Specialized or white-label solutions

Custom setups such as:

- SLAs and enhanced security
- Optional self-hosting on AWS, GCP, or Azure
- White-label and embedded analytics
- Dedicated support

05

Compatibility

Monitor all your LLMs from one place

Compatible with OpenAI, Anthropic, AI21, AWS Bedrock, GCP Vertex AI, and Mistral.

All six integrations are available now.